Enterprise security!

Kubernetes is a platform for hosting containerized applications in a scalable environment. For several years, it has been the first choice for companies that run such applications. Each application runs in an isolated environment, separate from other applications, and is packaged together with its OS layer (usually Linux-based) into one unit that is deployed as a whole. One big advantage is that the OS and application code are identical in every environment where you deploy them. So there are no surprises from obscure bugs that occur because, for example, the production server is running a .NET Framework version with a few fewer hotfixes than the acceptance environment.

When Kubernetes was relatively new, setting up a cluster was a specialized job. The manual setup involved installing various components on different (virtual) machines, which required experienced system administrators (see: link). Fortunately, various cloud providers such as Azure have since reduced this process to one-command deployments, where a complete cluster is set up within minutes according to the cloud provider’s guidelines.

Are the Kubernetes environments that are set up also secured at the highest level? Well… no, not especially. One of the most critical components of a Kubernetes cluster is the Kubernetes management API. This is used to give Kubernetes commands, usually via “kubectl” or a similar tool. See link for more information about this API. Did you know that when Azure first offered the Kubernetes Service in 2017, this API was completely open to the entire Internet? The Kubernetes management API was secured with an authentication layer, but malicious users could still search for vulnerabilities because the endpoint received all traffic from the public internet without a firewall in between. In late 2019, Azure introduced the ability to allow only specific IP addresses (see the announcement: link), and that is now the default when a new Azure Kubernetes Service is set up within your Azure subscription.

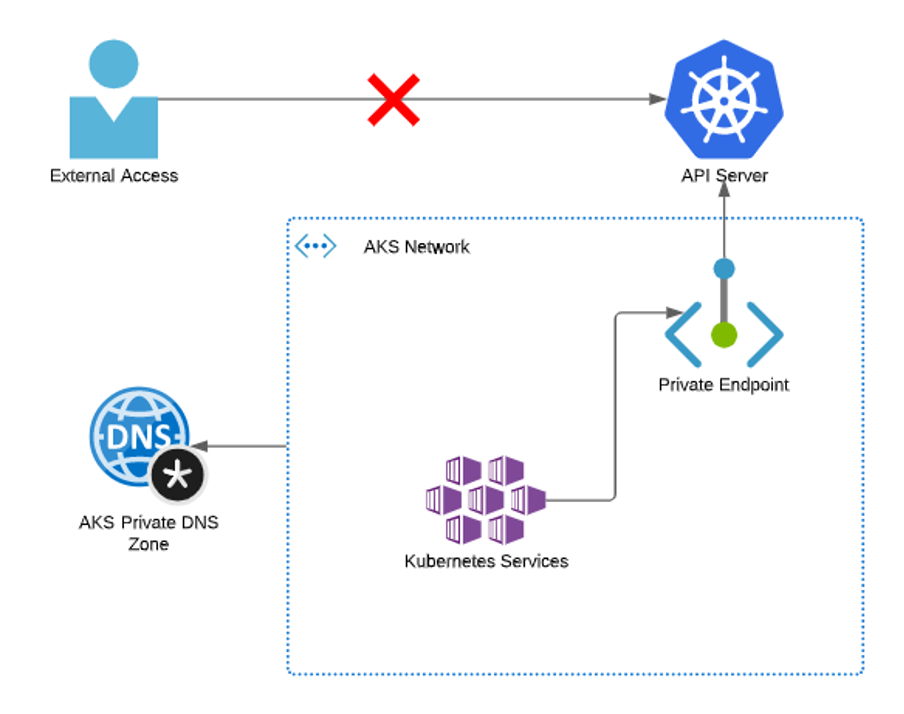
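As a minimal sketch of the IP restriction described above, the following Python helper builds an `az aks update` call with the `--api-server-authorized-ip-ranges` option. The resource group, cluster name, and CIDR ranges are hypothetical placeholders, and rejecting an empty list is a choice made for this sketch only.

```python
import ipaddress
import shlex


def authorized_ip_ranges_command(resource_group, cluster_name, cidrs):
    """Build an `az aks update` call that limits API server access to `cidrs`."""
    if not cidrs:
        # Sketch-level safeguard: never emit a command without any range.
        raise ValueError("at least one CIDR range is required")
    # ip_network() raises ValueError for malformed ranges or set host bits.
    normalized = [str(ipaddress.ip_network(cidr)) for cidr in cidrs]
    return [
        "az", "aks", "update",
        "--resource-group", resource_group,
        "--name", cluster_name,
        "--api-server-authorized-ip-ranges", ",".join(normalized),
    ]


# Hypothetical names and documentation-only IP ranges.
argv = authorized_ip_ranges_command(
    "rg-demo", "aks-demo", ["203.0.113.10/32", "198.51.100.0/24"]
)
print(shlex.join(argv))
```

Validating the ranges before calling the CLI catches typos early, but it does not replace reviewing which addresses really need access.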

But the Kubernetes management API is still partially open to the public Internet, albeit with an IP restriction. Because the endpoint still has a public IP address, the API remains exposed to any vulnerabilities in, for example, the firewall.

Therefore, since 2020, there has also been the option to inject the management API as a Private Link into the Virtual Network (VNET) that you manage, so that it is not publicly open to the internet. In this way, all traffic between the virtual machines that together form the Kubernetes cluster and the management API is entirely private, and there is no possibility to access the Kubernetes management API directly from the public internet. So, it is a very secure solution, especially in enterprise environments where the security of your applications and data is of great importance.
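In the Azure CLI, a private cluster is requested with the `--enable-private-cluster` flag of `az aks create`. One way to sanity-check the result from a machine inside the VNET is to confirm that the API server hostname resolves only to private addresses. The sketch below assumes you pass in your own API server hostname; the `resolve` parameter is a hypothetical hook so the check can be exercised without a network.

```python
import ipaddress
import socket


def resolves_privately(hostname, resolve=socket.getaddrinfo):
    """Return True if every address `hostname` resolves to is a private IP."""
    # getaddrinfo returns tuples whose last element is the socket address;
    # the first item of that socket address is the IP address string.
    results = resolve(hostname, 443)
    addresses = {info[4][0] for info in results}
    # An empty result is treated as a failure rather than as "private".
    return bool(addresses) and all(
        ipaddress.ip_address(address).is_private for address in addresses
    )
```

Note that Python's `is_private` also returns `True` for some reserved ranges (such as documentation addresses), so this is a rough check rather than proof that the endpoint is unreachable from the internet.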

The consequence is that you can no longer reach the management API from your own machine over the internet either. There are several options for managing such a private cluster:

- The easiest way is to use “az aks command invoke” (see: link) to perform “kubectl” or “helm” operations. With this option, a pod is started within the cluster to run your given command, so what you can do is limited by the security configuration within your cluster. You also need additional resources in your cluster to run the pod, and it is not the fastest option to execute.

- A separate virtual machine (Linux or Windows) running in the same Virtual Network (or in a VNET with peering to the VNET where the Kubernetes cluster runs) that you can log in to and from which you can communicate with the Kubernetes management API. Use the Azure Bastion service so that the VM does not need a public IP, as that would be a new security risk. You also need to keep the machine up-to-date with security patches so that this VM does not become a weak point in your infrastructure security (even if it does not have a public IP). Furthermore, you need to ensure that only a limited number of users can log in, each with their own Azure AD account, and that their actions are logged.

- A VPN connection to the VNET where the Kubernetes cluster runs to access the Kubernetes management API. There are various options for this, but the most accessible and recommended one is an Azure VPN Gateway in the (more expensive) variant that supports OpenVPN in combination with Azure AD authentication, so you can manage who can use the VPN connection. One big security risk with this option is that you are giving a (local) machine access to a shielded and controlled VNET where important applications and data are present. A (local) machine is more susceptible to malware and viruses, which then have direct access to these important applications and data!

- Use Azure Cloud Shell (link), configuring it to provide access to the VNET where the Kubernetes cluster runs. Azure Cloud Shell is a managed shell where you can run Azure-related commands, with the big advantage that it does not incur additional costs or management burdens. By means of some additional infrastructure (such as an Azure Relay), you can link this Cloud Shell environment to your VNET, as explained here: link. Experience shows that this option is very interesting but not yet fully mature: it takes several minutes to start the Cloud Shell, and sometimes the connection to the VNET cannot be established and you have to try again until it succeeds.
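To make the first option more concrete, here is a minimal Python sketch that assembles an `az aks command invoke` call. The resource group, cluster name, and the `kubectl` command are hypothetical placeholders. The `run` helper is only defined, not called, because it requires an installed and logged-in Azure CLI.

```python
import shlex
import subprocess


def command_invoke(resource_group, cluster_name, command):
    """Build an `az aks command invoke` call that runs `command` in the cluster."""
    return [
        "az", "aks", "command", "invoke",
        "--resource-group", resource_group,
        "--name", cluster_name,
        "--command", command,
    ]


def run(argv):
    """Execute the command; raises CalledProcessError on a non-zero exit code."""
    return subprocess.run(argv, check=True)


# Hypothetical names; printing keeps the example free of side effects.
argv = command_invoke("rg-demo", "aks-demo", "kubectl get pods -n kube-system")
print(shlex.join(argv))
```

Passing the arguments as a list avoids shell-quoting problems with the embedded `kubectl` command.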

Each option described above has its pros and cons, but the expectation is that there will be even better options to manage a ‘private Kubernetes cluster’ in the future.

As a final note, there is one more thing to consider when the Kubernetes management API can only be accessed within a VNET: how can you deploy a new version of your application on the Kubernetes cluster from a CI/CD pipeline, such as Azure DevOps? Using the pipeline agents managed by, for example, Azure DevOps is not an option, as they can only access virtual machines and services via a public IP. The solution is to use “self-hosted Azure DevOps agents” (or agents from another CI/CD service) within the VNET where the Kubernetes cluster is running. Even better is to place these agents in a separate VNET and connect it to the VNET of the Kubernetes cluster via peering (hub-spoke model). This means that you need to create and manage the agent yourself (for installation instructions you can check here: link).

For security and manageability reasons, you should ensure that administrators and developers cannot log in to the agent, and that the agent updates itself with the latest OS updates. You also need to install the tools required during the deployment of your application (such as “kubectl”), so provision this agent via Terraform, Ansible, or a similar tool so that you can always set up and update the agent in the same way. Additionally, ensure that in the CI/CD pipeline this agent is used only for deploying the application or microservice to a Kubernetes cluster, and not for executing the entire build process of an application. For the build, it is better to use the agents hosted by the CI/CD service provider, such as the Microsoft-hosted agents of Azure DevOps, as they contain more tools and are always up-to-date with newer versions.
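As an illustration of how small the deploy-only task on such a self-hosted agent can be, the following Python sketch builds two `kubectl` calls: one that updates the image of a deployment and one that waits for the rollout to finish. The deployment, container, image, and namespace names are hypothetical, and a real pipeline might use Helm or manifests instead.

```python
import shlex


def deploy_commands(deployment, container, image, namespace="default",
                    timeout_seconds=120):
    """Return the kubectl calls for a simple image update and rollout check."""
    target = f"deployment/{deployment}"
    return [
        # Point the named container of the deployment at the new image.
        ["kubectl", "set", "image", target, f"{container}={image}",
         "--namespace", namespace],
        # Block until the rollout completes or the timeout expires.
        ["kubectl", "rollout", "status", target,
         "--namespace", namespace, f"--timeout={timeout_seconds}s"],
    ]


# Hypothetical application and registry names.
for argv in deploy_commands("web", "web", "registry.example.com/web:1.2.3",
                            namespace="shop"):
    print(shlex.join(argv))
```

Keeping the agent's job this narrow fits the advice above: the agent only needs “kubectl” and network access to the management API, while building the image happens elsewhere.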

As you have read, there are quite a few things to consider when setting up a truly secure environment for your applications. Securing the Kubernetes API is just one of the many concerns you face when setting up a modern platform on which to run your company’s applications and microservices.